Artificial Intelligence (AI) has the potential to revolutionize the world we live in. It can streamline processes, improve efficiencies, and solve complex problems in ways that were previously unimaginable. From healthcare to finance, the possibilities of AI are endless. However, with great power comes great responsibility.

While the benefits of AI are undeniable, the ethics of its use are becoming more and more complicated. As we continue to rely on AI for critical decision-making processes, it is crucial that we consider the ethical implications of its use. Without proper consideration and oversight, AI can exacerbate existing societal issues and perpetuate harmful biases.

This ethics quandary is further complicated by the fact that AI is only as good as the data that it is trained on. This means that if we feed AI biased or incomplete data, it will produce biased or incomplete results. As a result, the power and perils of data must be thoroughly examined when considering AI’s ethical implications.

Given the scope of AI’s impact on society, there is a significant burden of responsibility on those who design, develop, and deploy AI systems. It is essential to ensure that AI is designed and implemented in a manner that is equitable and fair for all.

This brings us to the paradox of control: the balance between AI’s autonomy and human oversight. How do we ensure that AI systems make ethical and responsible decisions without impeding their capacity for innovation and learning?

Overall, the introduction of AI presents an opportunity for significant progress and positive change, but only if we address the critical ethical issues associated with its use. In the following sections, we will explore the power and perils of data and the responsibility burden of AI systems. We will also examine the paradox of control and look towards best practices for implementing ethical AI.

The Power and Perils of Data: AI’s Reliance on Information

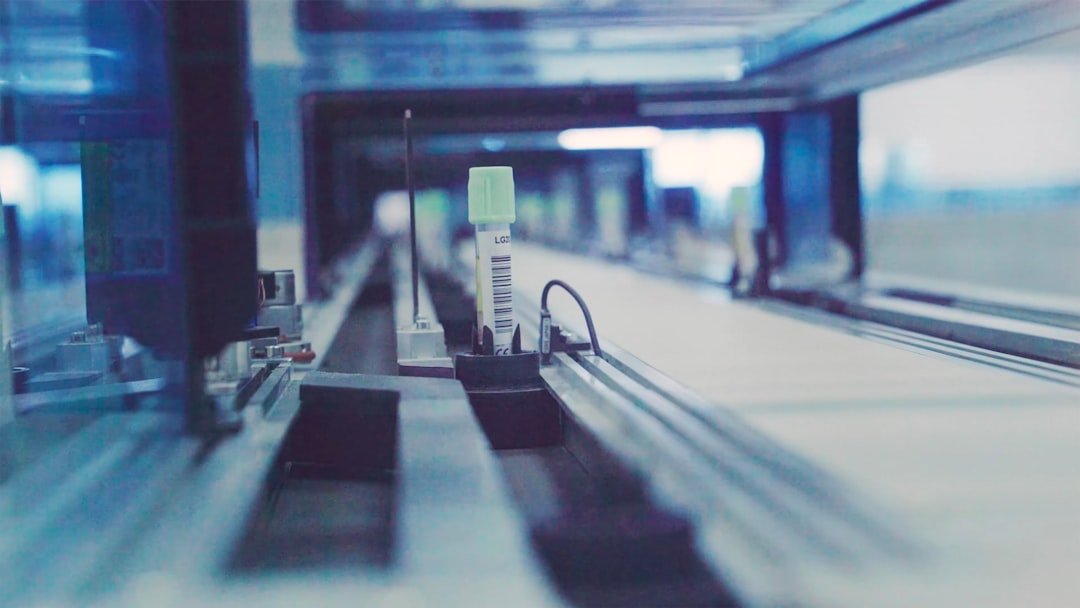

Artificial intelligence (AI) is a powerful technology that has the potential to greatly impact our daily lives, from healthcare to transportation, communication to entertainment. One of the critical components of AI is data, which is the fuel that powers machine learning and deep learning algorithms. Without high-quality data, AI would not be able to learn and improve, let alone make decisions that affect human lives.

However, the reliance on data also poses significant ethical challenges. The quality, quantity, and diversity of data affect the accuracy and fairness of AI systems. Biased and incomplete data can lead to discriminatory and prejudicial decisions, perpetuating existing social problems and inequalities. Furthermore, data privacy and security have become major concerns as the amount of data collected by AI systems grows rapidly.

There are also concerns about the sources of data used in AI systems. Many companies and organizations collect data from a variety of sources, including social media, internet searches, and consumer behavior. This mass collection of data raises concerns about user consent, data ownership, and ultimately, who benefits from the use of this data. With the potential for AI to generate massive profits for corporations, there is a fear that data may be used solely for corporate gain and not for the good of society or the individual.

It is vital that we address these ethical quandaries in the development and deployment of AI technologies, ensuring that the power of data is harnessed responsibly and ethically. This means identifying and eliminating biases in data collection and curation, adhering to privacy regulations and standards, and promoting transparency and accountability in AI decision-making processes.

In conclusion, the reliance of AI on data highlights the need for ethical considerations and practices in the development, implementation, and use of AI technologies. Ensuring that AI systems are designed and deployed both effectively and responsibly can help address societal challenges and ensure that the potential benefits of AI are realized.

The Responsibility Burden: AI’s Impact on Society

As AI continues to advance and become more integrated into our daily lives, one of the biggest questions that we need to ask ourselves is what kind of impact it is having on society as a whole. The development of AI technology and its integration into various sectors has brought with it a significant responsibility burden for companies, governments and society at large to ensure that it is used ethically and responsibly.

One of the primary concerns is the impact of AI on employment. As AI technology becomes more advanced and integrated into different industries, there is a risk that it could lead to significant disruptions in the job market. While AI has the potential to make many tasks more efficient and accurate, it could also lead to significant job displacement, particularly in industries where repetitive tasks are common. As such, there is a need for companies and governments to take a responsible approach to the development and implementation of AI technology to ensure that it does not have a negative impact on employment.

Another key concern is the potential for AI to perpetuate biases and discrimination. While AI may seem impartial and objective, it is only as unbiased as the data it is trained on. If the data used to train AI systems is biased, then it is highly likely that the AI system will also exhibit bias when making decisions. This could result in discriminatory outcomes, particularly in areas such as hiring, lending and criminal justice. The responsibility burden falls on companies to ensure that their AI systems are trained on unbiased data and that they are regularly audited to ensure that they are not perpetuating any biases.
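One way such an audit could look in practice is sketched below. It compares the share of favourable decisions each group receives and reports the ratio of the lowest rate to the highest. The group labels, the sample decisions, and the 0.8 review threshold (a commonly cited rule of thumb) are illustrative assumptions, not a prescribed method, and a real audit would look at more than one metric.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions per group.

    `records` is an iterable of (group, decision) pairs, where decision is
    True for a favourable outcome (for example, a loan approval).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable decision
    return min(rates.values()) / highest


# Hypothetical audit data: (group label, favourable decision?)
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

REVIEW_THRESHOLD = 0.8  # assumed rule-of-thumb cut-off, not a legal standard

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own; it is a signal that the system's decisions deserve a closer human review.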

AI also has the potential to exacerbate existing social inequalities. As AI technology becomes more integrated into society, those who have access to it and can afford it will likely gain a significant advantage over those who do not. This could lead to a widening of the economic divide and further marginalization of vulnerable groups. To address this concern, there needs to be a concerted effort to ensure that the benefits of AI are distributed fairly and equitably across society.

In addition to these concerns, there are also questions around accountability and transparency. As AI becomes more autonomous, it becomes increasingly difficult to understand how and why decisions are being made. This could make it challenging to hold companies and institutions accountable for the decisions made by their AI systems. There is a need for greater transparency and accountability in the development and deployment of AI systems to ensure that they are being used ethically and responsibly.

Overall, the responsibility burden of AI falls on everyone involved in its development and implementation. It is essential that companies, governments and society at large take a collaborative approach to ensure that AI is used ethically, responsibly, and with fair distribution of benefits across society. By doing so, we can help to ensure that AI continues to advance in a way that is both beneficial and sustainable for everyone.

The Paradox of Control: AI’s Autonomy and Human Oversight

The development of artificial intelligence has led to the creation of intelligent systems that can make decisions on their own, without human intervention. This autonomy, while impressive, raises concerns about who or what is in control. At its core, AI poses a paradox: it is designed to operate independently, yet it still requires human oversight.

One of the biggest challenges in developing autonomous AI systems is ensuring that they behave in ways that are predictable, safe, and ethical. As AI becomes more advanced and capable of performing increasingly complex tasks, the need for human intervention and control becomes more apparent. We must establish methods of oversight that are effective in ensuring that AI operates within acceptable parameters, and that the benefits of autonomy are maximized while minimizing the risks.

One aspect of the paradox of control is that AI systems can exhibit unpredictable behavior. This unpredictability is due to the complex algorithms and decision-making processes used by AI, which can be difficult for humans to understand. When an AI system behaves in a way that we don’t expect or understand, it can be difficult to determine what course of action to take. This is why it is important to develop methods of oversight that are capable of detecting and managing potential risks.

Another aspect of the paradox of control is that AI systems operate only within the bounds of their programming and training. Unlike humans, they cannot make ethical judgments or engage in critical thinking in the way people can. This means that AI systems can only make decisions based on the parameters set by their creators. As a result, it is crucial to ensure that these systems are built to operate within ethical boundaries.

The paradox of control also raises concerns about who or what is ultimately responsible for the actions of an autonomous AI system. Is it the AI system itself, or the human creators who programmed it? This question has significant implications for the legal and ethical frameworks that are necessary to govern the use of AI. It is essential that we address this issue in a thoughtful and comprehensive manner, to ensure that AI is developed and used in ways that are safe, ethical, and beneficial for all.

In conclusion, the paradox of control is a critical issue facing the development and implementation of AI systems. While the autonomy of AI provides unprecedented opportunities for innovation and progress, it also poses significant challenges. Effective methods of oversight and control are necessary to ensure that AI operates within ethical boundaries, and that the benefits of autonomy are maximized while minimizing risks. It is the responsibility of all stakeholders to address this issue in a comprehensive and thoughtful manner.

The Path to Ensuring Ethical AI: Implementation and Best Practices

Artificial intelligence (AI) has spread into many industries and shows no signs of slowing down. However, ensuring that AI is ethical has become a pressing issue, and many experts have raised concerns about the ethical quandary it poses. While an AI system can perform some tasks faster and more accurately than humans, its decisions may not always align with societal values. As a result, the discussion around ethical AI has become increasingly necessary, and it is vital to consider how to implement ethical AI practices.

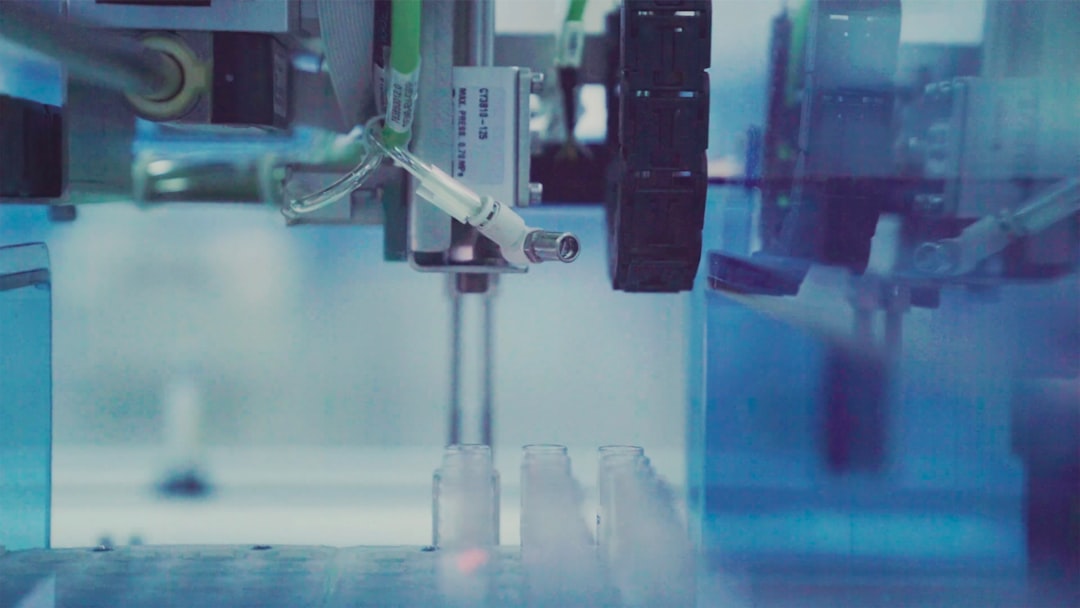

To ensure ethical AI, it is best to start at the inception of an AI system. Data is the foundation of any AI system, and it is vital to ensure that the data used aligns with ethical standards. The quality of data, particularly in the collection and aggregation phase, should be thoroughly assessed to identify and resolve potential data biases. Additionally, the development team should ensure that the data used is free from any discriminatory attributes such as gender or race-based criteria, as well as offensive language and content.
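As a minimal sketch of what assessing data at the collection stage might involve, the snippet below compares how often each group appears in a dataset against a reference share, such as its share of the population the system will serve. The group names, the sample, and the reference shares are hypothetical, and representation is only one of several aspects of data quality worth checking.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return observed share minus reference share for each group.

    `samples` is a list of group labels, one per record in the dataset.
    `reference_shares` maps each group to its expected share (0.0 to 1.0).
    A negative gap means the group is under-represented in the data.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical dataset: 8 records from group "x", 2 from group "y"
dataset_groups = ["x"] * 8 + ["y"] * 2
# Assumed reference: both groups make up half of the target population
expected = {"x": 0.5, "y": 0.5}

gaps = representation_gaps(dataset_groups, expected)
under_represented = [group for group, gap in gaps.items() if gap < 0]
print(gaps, under_represented)
```

Groups flagged this way are candidates for additional data collection or reweighting before training begins.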

Self-learning algorithms, which are integral to AI systems, require continuous monitoring and testing. This practice will help to identify any unexpected outcomes and biases, ensuring the AI system operates within ethical boundaries. Another way to ensure that AI systems are ethical is to develop regulation and governance frameworks. Governments and regulatory authorities can play a vital role in establishing guidelines and regulations that promote ethical AI practices.

Moreover, collaborations between stakeholders such as tech practitioners, policymakers, and non-governmental organizations can shed light on critical applications of AI systems that demand ethical considerations. Such collaborations can provide a framework for developing and implementing AI systems that enable social good, and they can pay off in two ways.

First, ethical AI development and implementation can open up new market opportunities for innovative ideas that promote equality and help tackle global societal and environmental issues. Second, firms and organizations can benefit from the use of AI systems with less exposure to legal, regulatory, social, or governance risks.

In conclusion, while AI presents an opportunity to ease our burdens and enhance services across various industries, it poses complex ethical challenges. The path toward ethical AI involves developing and upholding ethics frameworks built on government regulation, collaboration among tech practitioners, policymakers, and civil society, and AI systems that are transparent from the outset. By taking these steps, we can ensure that the benefits of AI are widely felt and that the technology is developed and used responsibly.

Conclusion: Moving Forward with Ethics as a Key Driver

As we have explored in the previous sections, the potential of AI is great, but it comes with a significant number of ethical quandaries. While AI can revolutionize industries and enhance our daily lives, there is a responsibility burden that comes with its implementation. As the power and perils of data continue to grow, it is essential that we consider the social impact of AI and integrate ethical considerations into its development and implementation.

To ensure that AI is developed and implemented with ethics at the forefront, there needs to be a concerted effort from all stakeholders involved. This includes regulatory bodies, AI developers, and society at large. We must recognize that AI has the power to shape the future of our world, and as such, we should prioritize the principles of transparency, accountability, and fairness in its development.

In moving forward with ethical AI, it is imperative that we regularly evaluate the ethical implications of AI systems and enforce strong ethical standards. Organizations should take a proactive approach to monitor the impacts of AI technology on society and implement measures to mitigate potential biases that may surface. In addition, society at large must have a fundamental understanding of the impact AI has and engage in discussions about the ethical considerations surrounding its use.

Overall, we must strive to build ethically sound AI that is aligned with our values as a society. As technology continues to advance, we must ensure that it aligns with our principles and aspirations for a better world. By prioritizing ethics in the development and implementation of AI, we can maximize its potential while minimizing its negative impact on society.

The Importance of Continuous Ethics Assessment in AI Development

As we discussed in the previous sections, artificial intelligence has enormous potential to transform nearly every aspect of our lives. However, this technology comes with a significant responsibility burden that must be addressed to prevent misuse and harmful consequences.

One of the key ethical dilemmas associated with AI is the issue of inherent bias in algorithms. AI is only as good as the data it is trained on, and if the data sets used to train AI are biased, then the resulting technology will also be biased. This means that it could perpetuate harmful stereotypes, reinforce systemic inequalities, or even make decisions that lead to real-world harm.

There is also the potential for AI to erode privacy and data security rights, enabling corporations and governments to amass and use vast amounts of personal data for unethical purposes. Furthermore, the risk of AI-enhanced automation leading to widespread job displacement cannot be ignored.

Given these ethical quandaries, it is essential to continuously assess the ethical implications of AI development. This assessment should be an ongoing process that starts at the beginning of any project and continues throughout the technology’s lifecycle.

One promising approach to ensuring ethical AI is the implementation of regulation and governance frameworks. For example, the European Union has proposed comprehensive legislation, the AI Act, which aims to create a harmonized and supportive legal framework for AI use across its member states.

Additionally, it is important to prioritize AI education from an early age, as well as the inclusion of diverse ethics and governance experts in AI development teams. This diversity can help ensure a range of perspectives are incorporated into the creation of AI systems, reducing the potential for inherent bias and ensuring that all ethical implications are considered.

There is still much work to be done in developing ethical AI, and it is a critical issue that should remain at the forefront of our minds as we continue to innovate and develop this groundbreaking technology. By continually assessing ethical ramifications and prioritizing diverse and inclusive workforces, we can ensure that AI is used responsibly to benefit humanity as a whole.