Artificial Intelligence (AI) has been a buzzword for quite some time now. The term refers to the ability of machines to perform tasks that typically require human intelligence. It involves the development of algorithms and computer programs that can simulate human reasoning and perception, with the goal of creating machines that can think and act like humans.

In recent years, AI has experienced rapid growth, with significant advancements in machine learning, natural language processing, and computer vision. These advancements have led to the development of intelligent systems that can perform complex tasks with increased efficiency and accuracy.

The potential benefits of AI are vast, ranging from increased productivity and cost savings to improved healthcare and education. However, with these benefits come significant risks and ethical considerations that must be addressed.

As we continue to push the boundaries of AI, it is essential to understand its potential impact on society and to develop responsible strategies for its development and deployment. In the following sections, we will explore the benefits, risks, ethical considerations, and regulatory frameworks for AI.

The Benefits of AI: Increased Efficiency and Accuracy

As AI technology continues to advance, its benefits are becoming increasingly clear. One of the primary benefits is increased efficiency. For many well-defined tasks, AI systems can work far more quickly than humans, and often more accurately, which can save businesses and organizations a significant amount of time and money.

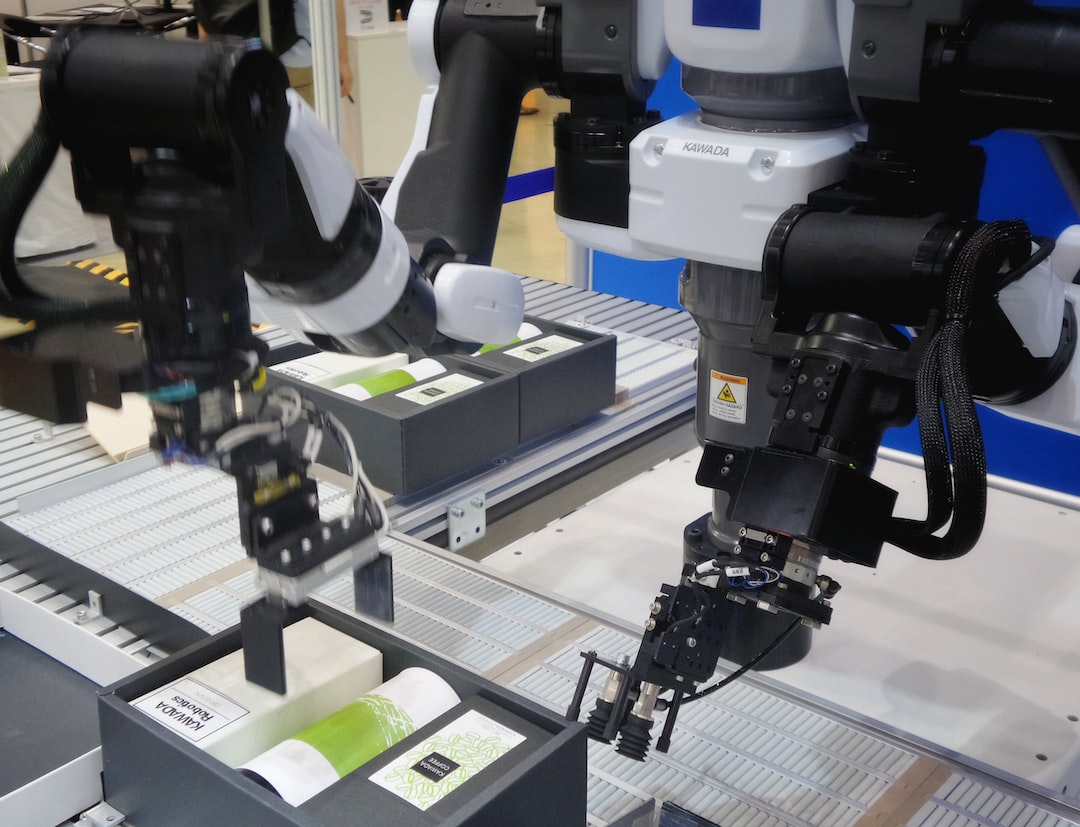

For example, in the healthcare industry, AI-powered systems can help doctors and nurses diagnose illnesses more quickly and accurately. This can lead to better patient outcomes and a more efficient use of resources. Similarly, in the manufacturing industry, AI-powered robots can perform repetitive and dangerous tasks with greater precision and speed than humans, reducing the risk of injury and improving production efficiency.

Another major benefit of AI is increased accuracy. AI systems can process vast amounts of data and, when that data is relevant and of good quality, make highly accurate predictions from it. This can be incredibly valuable in fields such as finance, where AI-powered systems can help identify potential risks and opportunities in the stock market.

AI can also be used to improve customer service. Chatbots and virtual assistants powered by AI can provide customers with fast and accurate responses to their inquiries, improving their overall experience and satisfaction.

Overall, the benefits of AI are clear. By increasing efficiency and accuracy, AI has the potential to improve a wide range of industries and make our lives easier and more productive. However, it is important to also consider the potential risks and ethical implications of AI, which we will explore in the next section.

The Risks of AI: Job Displacement and Bias

AI has undoubtedly brought about a multitude of benefits, but it is not without its risks. One of the most significant risks associated with AI is job displacement. As AI continues to advance, it has the potential to automate many jobs that are currently performed by humans. This could lead to significant job losses in certain industries, particularly those that rely heavily on manual labor or routine tasks.

The displacement of jobs is not a new concept, but the speed at which AI is advancing has raised concerns about the ability of workers to adapt to new roles and industries. While some argue that AI will create new jobs and industries, there is no guarantee that these new opportunities will be accessible to those who have been displaced.

Another risk associated with AI is bias. AI systems are only as objective as the data they are trained on, and if that data is biased, then the AI system will be biased as well. This is particularly concerning when it comes to areas such as hiring and lending decisions, where biases could lead to discrimination against certain groups of people.

It is important to recognize that bias in AI is often unintentional: it typically arises from the data used to train the system rather than from deliberate design. This highlights the need for diverse and representative data sets in the development of AI systems.

Overall, it is clear that AI has the potential to bring about significant benefits, but it is important to consider and address the risks associated with its development and deployment. Job displacement and bias are just two of the many risks that must be carefully examined and managed in order to ensure that the benefits of AI are realized in a responsible and ethical manner.

Ethical Considerations for AI: Transparency and Accountability

As AI continues to rapidly advance, it is important to consider the ethical implications of its development and deployment. One of the most crucial aspects of AI ethics is transparency. It is imperative that AI systems are designed and developed in a way that is open and transparent to users, regulators, and other stakeholders.

Transparency in AI means that the decision-making processes of the system are clear and understandable. Users should be able to understand how the system arrived at its decisions and what factors were considered. This is particularly important in applications where AI is used to make decisions that have significant impacts on people’s lives, such as in healthcare, finance, and criminal justice.
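To make the idea concrete, here is a minimal Python sketch of the most transparent kind of model: a linear score whose output can be broken down into per-factor contributions, so a user can see exactly which factors were considered and how much each one mattered. The feature names, weights, and baseline are hypothetical values chosen for illustration, not taken from any real system. Modern AI models are usually far less interpretable than this and often need dedicated explanation techniques.

```python
# Hypothetical weights for an illustrative credit-scoring model (not a real product).
# Feature values are assumed to be already normalized to comparable scales.
WEIGHTS = {"income": 0.4, "debt_ratio": -0.7, "years_employed": 0.2}
BASELINE = 0.1


def score_with_explanation(applicant):
    """Return the score and each feature's contribution (weight * value)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASELINE + sum(contributions.values())
    return score, contributions


def explain(applicant):
    """Build a human-readable explanation, most influential factor first."""
    score, contributions = score_with_explanation(applicant)
    lines = [f"score = {score:.2f} (baseline {BASELINE:.2f})"]
    # Sort by absolute contribution so the biggest drivers appear at the top.
    for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
        lines.append(f"  {name}: {value:+.2f}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(explain({"income": 1.5, "debt_ratio": 0.8, "years_employed": 2.0}))
```

For this hypothetical applicant the explanation lists income first (pushing the score up), then debt_ratio (pulling it down), then years_employed, which is the kind of answer to "how did the system arrive at this decision?" that transparency calls for.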

Another important ethical consideration for AI is accountability. As AI systems become more complex and autonomous, it is important to ensure that there is a clear chain of responsibility for their actions. This means that developers, manufacturers, and users should be held accountable for any harm caused by the system.

One way to ensure accountability is through the use of ethical frameworks and guidelines. These frameworks can help developers and users understand the ethical implications of AI and provide guidance on how to design and use AI in an ethical manner. For example, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has developed a set of ethical guidelines for AI that emphasize transparency, accountability, and human values.

In addition to ethical frameworks, there is also a need for regulatory oversight of AI development and deployment. Governments and other regulatory bodies can play an important role in ensuring that AI is developed and used in a responsible and ethical manner. This can include setting standards for transparency and accountability, as well as enforcing penalties for non-compliance.

Overall, ethical considerations for AI are complex and multifaceted. Transparency and accountability are just two of the many factors that must be considered in the development and deployment of AI. By working together, developers, users, regulators, and other stakeholders can ensure that AI is developed and used in a way that is ethical, responsible, and beneficial to society.

The Role of Government in Regulating AI

As we have discussed in the previous sections, AI is a powerful tool with numerous benefits and risks. It is clear that AI has the potential to transform our world, but it is equally clear that we need to approach its development and deployment with caution. One of the key players in this process is the government.

The government has a crucial role to play in regulating AI. This is because AI has the potential to impact society in a number of ways, from job displacement to bias in decision-making. It is the responsibility of the government to ensure that AI is developed and deployed in a way that benefits society as a whole, rather than just a select few.

One of the primary ways in which the government can regulate AI is through legislation. There are a number of different laws and regulations that could be put in place to ensure that AI is developed and deployed in an ethical and responsible manner. For example, the government could require companies to be transparent about the data they are using to train their AI algorithms, or it could establish guidelines for the use of AI in hiring and firing decisions.

In addition to legislation, the government can also play a role in funding research and development in AI. This can help ensure that AI is being developed in a way that is beneficial to society, rather than just for the sake of profit. The government can also invest in education and training programs to help prepare the workforce for the changes that AI is likely to bring about.

However, it is important to note that there are also risks associated with government regulation of AI. For example, overly restrictive regulations could stifle innovation and hinder the development of AI. It is important to strike a balance between ensuring that AI is developed and deployed in a responsible manner, while also allowing for innovation and progress.

In short, the government has a crucial role to play in regulating AI, but it must strike a balance between ensuring that AI is developed and deployed responsibly and leaving room for innovation and progress. By working together with the private sector and other stakeholders, we can ensure that AI is developed in a way that benefits society as a whole.

Corporate Responsibility in Developing and Deploying AI

When it comes to the development and deployment of artificial intelligence, corporations have a critical role to play in ensuring that the technology is used for the betterment of society. As companies continue to invest in AI, they must also consider the potential risks and ethical concerns that come with it.

One of the most significant responsibilities of corporations is to ensure that their AI systems are transparent and accountable. This means that companies must be able to explain how their AI systems make decisions and provide evidence to support those decisions. Transparency is especially important in areas such as healthcare and finance, where the consequences of AI decisions can be life-altering.

Another critical responsibility of corporations is to address the issue of bias in AI. Because AI systems are only as unbiased as the data they are trained on, biased training data can lead to discriminatory outcomes, such as denying loans or jobs to certain groups of people. Companies must take steps to ensure that their AI systems are trained on data that is as representative and free of bias as possible, and that the systems are regularly audited to detect and correct any biases that may emerge.
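As a rough illustration of what one such audit might check, the Python sketch below compares approval rates across groups and flags the system when the lowest group's rate falls below 80% of the highest group's. This "four-fifths" threshold is a rule of thumb drawn from US employment-selection guidance; the groups, data, and function names here are hypothetical, and a real audit would examine many more metrics than this one ratio.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive outcomes for each group.

    `decisions` is a non-empty list of (group, approved) pairs, where
    `approved` is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no rate difference to measure
    return min(rates.values()) / highest


def audit(decisions, threshold=0.8):
    """Return (ratio, flagged); flagged is True when ratio < threshold."""
    ratio = disparate_impact_ratio(decisions)
    return ratio, ratio < threshold


if __name__ == "__main__":
    # Hypothetical loan decisions for two illustrative groups, A and B.
    sample = (
        [("A", True)] * 60 + [("A", False)] * 40
        + [("B", True)] * 30 + [("B", False)] * 70
    )
    ratio, flagged = audit(sample)
    print(f"ratio={ratio:.2f} flagged={flagged}")
```

In this made-up sample, group A is approved 60% of the time and group B only 30%, giving a ratio of 0.50, well under the 0.8 threshold, so the audit flags the system for closer review. A flag is a prompt to investigate, not proof of discrimination on its own.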

In addition to transparency and bias, corporations must also consider the potential impact of AI on jobs. While AI has the potential to increase efficiency and accuracy, it also has the potential to displace workers. Companies must be mindful of this and take steps to retrain and reskill workers who are at risk of losing their jobs due to AI.

Finally, corporations must consider the broader societal impacts of their AI systems. They must ask themselves whether their AI systems are truly benefiting society or whether they are simply serving the interests of the company. Companies must also consider the potential unintended consequences of their AI systems and take steps to mitigate any negative impacts.

Overall, the development and deployment of AI is not just a technical issue but also an ethical and societal one. Corporations must take responsibility for ensuring that their AI systems are transparent, unbiased, and beneficial to society as a whole. This requires a commitment to transparency, accountability, and a willingness to address the potential risks and ethical concerns that come with AI.

Conclusion: Finding a Balance between Progress and Responsibility in the Development of AI

As we have seen throughout this blog post, AI has the potential to revolutionize many aspects of our lives, from increasing efficiency and accuracy to driving innovation and creating new industries. However, it is important to recognize that this potential comes with significant risks and ethical considerations that must be taken into account.

While governments and corporations have a critical role to play in regulating and deploying AI responsibly, ultimately it is up to all of us to ensure that this technology is used in a way that benefits society as a whole. This means prioritizing transparency, accountability, and fairness in the development and deployment of AI, while also recognizing the potential for unintended consequences and taking steps to mitigate these risks.

At the same time, it is important not to stifle innovation or progress in the name of caution. AI has the potential to solve some of the world’s most pressing challenges, from climate change to healthcare, and it is only by embracing this technology that we can hope to address these issues effectively.

In conclusion, finding a balance between progress and responsibility in the development of AI is no easy task, but it is one that we must undertake if we are to realize the full potential of this technology. By working together and prioritizing the needs of society as a whole, we can ensure that AI is used in a way that benefits everyone and helps to create a better, more equitable future for all.