Coding, the art of creating instructions for computers to follow, has a rich and fascinating history whose roots reach back to the 19th century. To appreciate the advances we see in coding today, it helps to understand its origins and how it has evolved over time.

In the early days of computing, coding was a laborious and time-consuming process. It often involved punch cards, a technology that had been used to control textile looms since the early 19th century. These cards, made of stiff paper or cardboard, contained a series of holes that represented data or instructions. Programmers would carefully punch holes into the cards to create a sequence of commands for the computer.

The breakthrough came with the development of assembly language, a low-level programming language that allowed programmers to write instructions using mnemonic codes, each standing for a specific machine operation. Assembly language made coding more accessible and efficient, because programmers no longer had to write raw binary machine code by hand. Instead, they could write human-readable instructions that a program called an assembler translated into machine code.

However, assembly language still had its limitations. It was specific to each type of computer architecture, making programs written in one assembly language incompatible with others. This lack of portability led to the need for higher-level programming languages.

High-level programming languages began to emerge in the 1950s. Early examples such as FORTRAN (1957), COBOL (1959), and BASIC (1964) were designed to be more user-friendly and more portable than assembly language. FORTRAN, short for “Formula Translation,” was one of the earliest high-level languages and was used primarily for scientific and engineering calculations. COBOL, or “Common Business-Oriented Language,” catered to business applications, while BASIC, or “Beginner’s All-purpose Symbolic Instruction Code,” aimed to make programming accessible to beginners.

These high-level languages gave programmers powerful tools for writing complex programs more efficiently. They offered named variables, loops, and conditional statements as built-in constructs, making coding less cumbersome. Because programs were easier to read and understand, programmers could focus on solving problems instead of getting lost in the intricacies of assembly language.

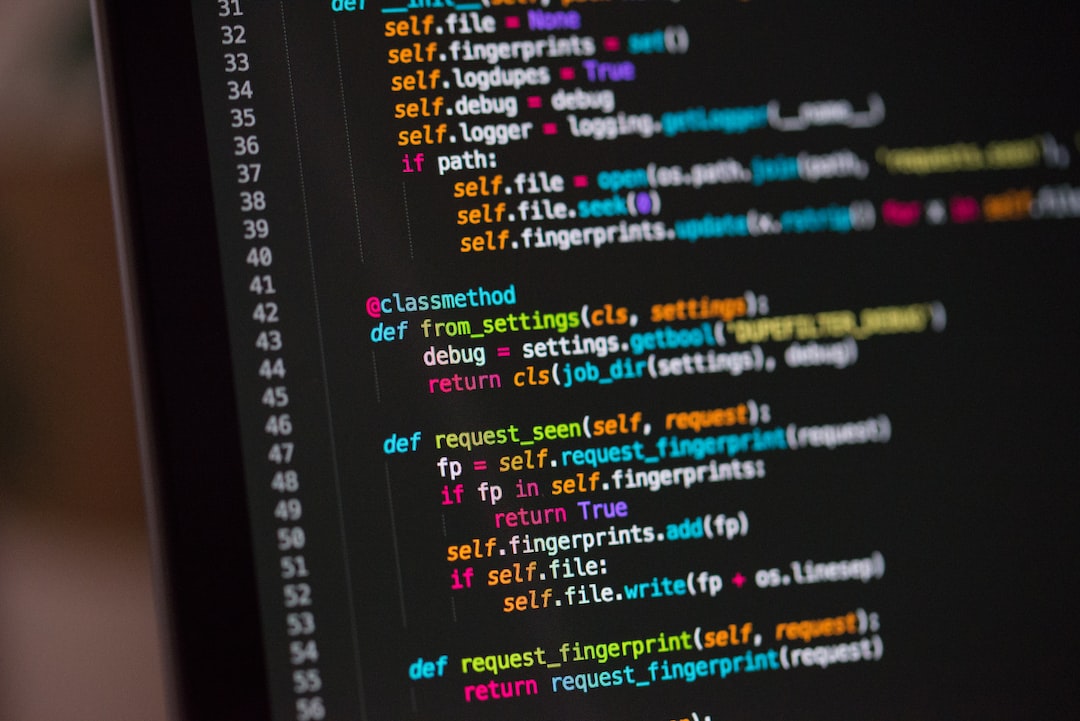

As the years went by, the demand for more versatile and flexible programming languages grew. This led to the emergence of object-oriented programming (OOP) languages like C++, Java, and Python. Object-oriented programming revolutionized software development by introducing the concept of objects, which encapsulate both data and the operations that manipulate it.

C++, which Bjarne Stroustrup began developing in 1979, combined the efficiency of low-level languages with the benefits of high-level languages. It allowed programmers to create reusable code through classes and supported features like inheritance and polymorphism.

Java, introduced in the mid-1990s, brought the concept of platform independence to the forefront. Its “write once, run anywhere” philosophy made it a popular choice for cross-platform development. With its extensive libraries and robust security features, Java became a staple in enterprise applications.

Python, a versatile and beginner-friendly language, gained popularity in the early 2000s. Known for its readability and simplicity, it became a popular choice for web development, scientific computing, and artificial intelligence applications.

With the advent of the internet, coding took on a whole new dimension. Web development became a crucial aspect of coding as websites and web applications became integral to our daily lives. Dynamic languages like PHP, JavaScript, and Ruby gained prominence, enabling developers to create interactive and responsive web experiences.

As we look to the future, coding continues to evolve and adapt to meet the demands of an ever-changing technological landscape. Machine learning and artificial intelligence are pushing the boundaries of what coding can achieve. Developers are now tasked with creating intelligent systems that can learn, reason, and make decisions on their own.

In conclusion, the origins of coding took us from punch cards to assembly language, and from there, we witnessed the rise of high-level programming languages, object-oriented programming, and the internet age. Now, we find ourselves at the forefront of machine learning and artificial intelligence. The future of coding is bright and full of possibilities, and with each passing day, new innovations and technologies will continue to shape the world of coding as we know it. So, whether you’re a seasoned programmer or just starting on your coding journey, embrace the ever-evolving nature of coding and let your imagination soar.

The Origins of Coding: From Punch Cards to Assembly Language

Coding, also known as programming, is the backbone of modern technology. It enables us to create software, build websites, develop apps, and power the digital world we live in today. But have you ever wondered where coding originated? In this section, we will dive into the early days of coding and explore how it has evolved over time.

The journey of coding began long before computers were part of our lives. It can be traced back to the 1830s, when Charles Babbage, an English mathematician, conceptualized a programmable machine called the Analytical Engine. Although the Analytical Engine was never built, Babbage’s work laid the foundation for future developments in coding.

Fast forward to the mid-20th century, and we witness the birth of modern coding techniques. During this time, computers relied on punch cards as the primary means of input and output. These cards contained patterns of holes that represented data and instructions for the computer to execute. Programmers would manually punch holes in these cards to write their code.
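To make the idea of “patterns of holes” concrete, here is a small Python sketch of the character code commonly used on 80-column IBM cards. Each column holds one character, and each character is a combination of punched rows labelled 12, 11, and 0 to 9. The sketch assumes that common code and covers only digits, upper-case letters, and spaces. Punctuation encodings varied between machines and are left out, and the function names are illustrative.

```python
# Sketch of the common IBM 80-column card code (digits, upper-case letters, space).
# Each character occupies one column; the result lists the rows punched in it.
DIGITS = "0123456789"


def punches_for(ch: str) -> tuple[str, ...]:
    """Return the punched rows for one character, e.g. 'A' -> ('12', '1')."""
    if len(ch) != 1:
        raise ValueError("expected exactly one character")
    if ch == " ":
        return ()                                    # blank column: no holes
    if ch in DIGITS:
        return (ch,)                                 # digit: one hole in rows 0-9
    if "A" <= ch <= "I":
        return ("12", str(ord(ch) - ord("A") + 1))   # zone 12 plus digit 1-9
    if "J" <= ch <= "R":
        return ("11", str(ord(ch) - ord("J") + 1))   # zone 11 plus digit 1-9
    if "S" <= ch <= "Z":
        return ("0", str(ord(ch) - ord("S") + 2))    # zone 0 plus digit 2-9
    raise ValueError(f"{ch!r} is not covered by this sketch")


def encode_card(text: str) -> list[tuple[str, ...]]:
    """Encode a line of text, one card column per character."""
    text = text.upper()
    if len(text) > 80:
        raise ValueError("a card holds at most 80 columns")
    return [punches_for(ch) for ch in text]


for column, rows in enumerate(encode_card("GO TO 10"), start=1):
    print(f"column {column}: rows {rows}")
```

Letters need two holes: a “zone” punch (12, 11, or 0) plus a digit punch. This is one reason a single mis-punched hole could silently turn one character into another.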

However, coding using punch cards was a tedious and error-prone process. A single mistake in punching or arranging the cards could result in hours of debugging. Thankfully, advancements in technology led to the development of assembly language, which simplified the coding process.

Assembly language is a low-level programming language that uses mnemonic codes to represent machine instructions. Programmers could now write code using human-readable instructions that were then translated into machine code by an assembler. This made coding more accessible and reduced the likelihood of errors.
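The translation step an assembler performs can be sketched in a few lines of Python. The instruction set below is entirely hypothetical: it describes a made-up 8-bit machine in which each instruction is one byte, with a 4-bit opcode and a 4-bit operand. Real assemblers also handle labels, addressing modes, and many more instructions.

```python
# Toy single-pass assembler for a made-up 8-bit machine (hypothetical ISA).
# Each instruction is one byte: the high 4 bits hold the opcode, the low 4 bits the operand.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}


def assemble(source: str) -> bytes:
    machine_code = bytearray()
    for line_no, line in enumerate(source.splitlines(), start=1):
        line = line.split(";", 1)[0].strip()          # drop comments and blank space
        if not line:
            continue
        mnemonic, *args = line.split()
        opcode = OPCODES.get(mnemonic.upper())
        if opcode is None:
            raise ValueError(f"line {line_no}: unknown mnemonic {mnemonic!r}")
        operand = int(args[0]) if args else 0          # memory address, 0-15
        if not 0 <= operand <= 0xF:
            raise ValueError(f"line {line_no}: operand {operand} does not fit in 4 bits")
        machine_code.append((opcode << 4) | operand)   # pack opcode and operand
    return bytes(machine_code)


program = """
    LOAD 5    ; copy the value at address 5 into the accumulator
    ADD 6     ; add the value at address 6
    STORE 7   ; write the result to address 7
    HALT
"""
print(" ".join(f"{byte:08b}" for byte in assemble(program)))
# 00010101 00100110 00110111 11110000
```

Each mnemonic maps directly to a bit pattern for one specific processor. This is why assembly programs stay tied to a single architecture: change the processor and the opcode table has to change with it.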

The introduction of assembly language marked a significant milestone in coding history. It paved the way for the development of higher-level programming languages, which brought even more flexibility and efficiency to the coding process.

The origins of coding can be traced back to the visionary ideas of Charles Babbage and the early use of punch cards as a means of programming. From these humble beginnings, coding has evolved to become a crucial aspect of our modern technological landscape. In the next section, we will explore the rise of high-level programming languages such as FORTRAN, COBOL, and BASIC, which further revolutionized the coding world.

The Rise of High-Level Programming Languages: FORTRAN, COBOL, and BASIC

When it comes to understanding the history of coding, it’s essential to explore the significant shift that occurred with the rise of high-level programming languages. These languages brought about a level of abstraction that allowed developers to write code more efficiently, making programming accessible to a broader audience. In this section, we will delve into the origins and impact of three influential high-level programming languages: FORTRAN, COBOL, and BASIC.

FORTRAN: Revolutionizing Scientific Computing

In the early days of computing, programmers worked mostly in machine language, writing instructions directly in binary code, or in assembly language. This low-level approach was time-consuming and prone to errors. Recognizing the need for a more efficient solution, John W. Backus and his team at IBM developed FORTRAN (from “The IBM Mathematical Formula Translating System”), delivering the first compiler in 1957.

FORTRAN is generally regarded as the first widely used high-level programming language, and it was a game-changer for the scientific and engineering communities. It let scientists write mathematical formulas in a notation close to ordinary algebra, and the compiler translated those formulas into machine instructions. This led to significant advances in fields such as physics, chemistry, and engineering, because researchers could focus on their domain expertise rather than on the intricacies of programming.
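A toy translator in Python can illustrate the core idea behind formula translation: turning an algebraic expression into a sequence of simple machine steps. This is not how IBM’s FORTRAN compiler worked. The sketch relies on Python’s own `ast` parser, and the stack-machine instruction names (`PUSH`, `ADD`, `MUL`, and so on) are hypothetical.

```python
import ast

# Hypothetical stack-machine mnemonics for the four arithmetic operators.
BINARY_OPS = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}


def translate(formula: str) -> list[str]:
    """Translate an infix formula such as 'a * x + b' into stack instructions."""
    instructions: list[str] = []

    def emit(node: ast.AST) -> None:
        if isinstance(node, ast.BinOp) and type(node.op) in BINARY_OPS:
            emit(node.left)                            # compute the left operand first
            emit(node.right)                           # then the right operand
            instructions.append(BINARY_OPS[type(node.op)])
        elif isinstance(node, ast.Name):
            instructions.append(f"PUSH {node.id}")     # load a variable
        elif isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            instructions.append(f"PUSH {node.value}")  # load a numeric literal
        else:
            raise ValueError(f"unsupported syntax: {ast.dump(node)}")

    emit(ast.parse(formula, mode="eval").body)
    return instructions


for step in translate("a * x + b"):
    print(step)
# PUSH a / PUSH x / MUL / PUSH b / ADD (one per line)
```

The scientist writes only `a * x + b`. Ordering the loads and the arithmetic correctly becomes the translator’s job, which is the kind of bookkeeping early high-level languages took off programmers’ hands.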

COBOL: Bridging the Gap between Business and Technology

As computers became more prevalent in the business world during the 1950s and 1960s, there arose a need for a programming language that could handle complex business operations. Thus, COBOL (short for “COmmon Business-Oriented Language”) was born. Developed by a committee of industry experts, COBOL aimed to bridge the gap between business requirements and technological implementation.

COBOL’s syntax was designed to be easily readable, using English-like statements that mirrored business terminology and processes. The goal was to make programs understandable to managers and other non-specialists, not only to programmers. COBOL’s longevity is a testament to its success: it is still in use today, powering critical business systems worldwide.

BASIC: Making Programming Accessible to the Masses

In the early 1960s, most high-level languages were still too complex for newcomers, and programming was largely limited to specialists in science and engineering. To change this, John G. Kemeny and Thomas E. Kurtz developed BASIC (short for “Beginner’s All-purpose Symbolic Instruction Code”) at Dartmouth College in 1964, so that students in any field could learn to program.

BASIC was designed with simplicity and accessibility in mind. Its syntax was easy to learn, which made it an ideal choice for beginners and others new to programming. With its interactive nature and user-friendly interface, BASIC played a pivotal role in the early days of personal computing. It empowered individuals to create their own software, paving the way for the computer revolution that would follow.

These high-level programming languages, FORTRAN, COBOL, and BASIC, revolutionized the way code was written and opened up coding to a broader audience. They marked a turning point in the history of programming, making it more accessible, efficient, and applicable to various domains. The impact of these languages can still be seen today, as they laid the foundation for the development of countless other modern programming languages.

The Emergence of Object-Oriented Programming: C++, Java, and Python

In the ever-evolving world of coding, one of the most significant advancements came with the emergence of object-oriented programming (OOP). OOP introduced a new way of organizing and structuring code, bringing with it increased flexibility, reusability, and efficiency. This paradigm shift revolutionized the way developers approached software development and set the stage for the creation of some of the most popular programming languages we use today.

C++ was one of the first widely adopted languages to bring OOP into mainstream use. Bjarne Stroustrup began developing it in 1979 as “C with Classes,” and it was released commercially in the mid-1980s. C++ combined the power and performance of its predecessor, C, with the new paradigm of OOP. With its ability to define classes, objects, and inheritance hierarchies, C++ allowed developers to write more modular, maintainable, and scalable code.

The success and influence of C++ paved the way for the development of Java in the mid-1990s. Java was designed to be platform-independent. Its source code is compiled to bytecode that runs on the Java Virtual Machine (JVM), so the same program can run on any device or operating system that has a JVM. Its simplified syntax, garbage collection, and robust libraries made it a favorite for enterprise applications. Java’s popularity skyrocketed, and it soon became one of the most widely used programming languages in the world.
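Java gets its portability from an extra layer. The compiler produces bytecode rather than native machine code, and a virtual machine on each platform executes that bytecode. The Python sketch below imitates the idea with a tiny stack-based interpreter. Its instruction set is hypothetical and far simpler than real JVM bytecode. The point is only that `program` is plain data, so it runs unchanged anywhere the interpreter runs.

```python
# A toy stack-based virtual machine. The instruction set is hypothetical and only
# illustrates "compile once to a neutral format, run wherever an interpreter exists".

def run(bytecode: list[tuple], variables: dict[str, float]) -> float:
    stack: list[float] = []
    for op, *args in bytecode:
        if op == "PUSH_CONST":
            stack.append(args[0])                    # push a literal value
        elif op == "PUSH_VAR":
            stack.append(variables[args[0]])         # push a named input
        elif op in ("ADD", "MUL"):
            right, left = stack.pop(), stack.pop()   # operands come off in reverse order
            stack.append(left + right if op == "ADD" else left * right)
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return stack.pop()                               # the result is left on top


# Bytecode for: price * quantity + 5
program = [("PUSH_VAR", "price"), ("PUSH_VAR", "quantity"), ("MUL",),
           ("PUSH_CONST", 5), ("ADD",)]
print(run(program, {"price": 3, "quantity": 4}))     # 17
```

Porting this “platform” to a new machine means porting only the interpreter. Every bytecode program then comes along for free, which is the trade-off the JVM makes at a much larger scale.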

Python is another widely used language that supports OOP. Guido van Rossum began work on it in late 1989 and first released it in 1991, designing it to be easy to read, write, and understand. Its simplicity and readability attracted developers from various backgrounds, making it an excellent choice for beginners and experienced programmers alike. Python’s extensive libraries and frameworks, such as the web frameworks Django and Flask, have made it a go-to language for web development, data analysis, and scientific computing.

What makes OOP so powerful is its ability to model real-world entities as objects, which can interact with each other through defined relationships. This approach allows developers to create complex systems by breaking them down into smaller, more manageable components. It promotes code reuse, encapsulation, and abstraction, making it easier to build and maintain large-scale applications.
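Here is a minimal Python sketch of these ideas, built on hypothetical shape classes. The abstract base class provides abstraction. The underscore-prefixed attributes show encapsulation by convention, since Python does not enforce private access. The subclasses inherit from `Shape`, and the final loop relies on polymorphism.

```python
import math
from abc import ABC, abstractmethod


class Shape(ABC):
    """Abstraction: every shape can report its area, however it computes it."""

    def __init__(self, name: str) -> None:
        self._name = name                  # encapsulated state (private by convention)

    @property
    def name(self) -> str:
        return self._name

    @abstractmethod
    def area(self) -> float:
        ...


class Circle(Shape):                       # inheritance: a Circle is a Shape
    def __init__(self, radius: float) -> None:
        super().__init__("circle")
        self._radius = radius

    def area(self) -> float:
        return math.pi * self._radius ** 2


class Rectangle(Shape):
    def __init__(self, width: float, height: float) -> None:
        super().__init__("rectangle")
        self._width, self._height = width, height

    def area(self) -> float:
        return self._width * self._height


# Polymorphism: the loop calls area() without knowing each object's concrete class.
for shape in [Circle(1.0), Rectangle(2.0, 3.0)]:
    print(f"{shape.name}: {shape.area():.2f}")
# prints "circle: 3.14" then "rectangle: 6.00"
```

A new shape only needs to subclass `Shape` and implement `area()`. Any code written against `Shape` then works with it unchanged.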

Furthermore, OOP facilitates collaboration among developers by providing a common framework and vocabulary for discussing and understanding code. It encourages modular design and extensibility, enabling teams to work simultaneously on different parts of a project without stepping on each other’s toes. This collaborative nature of OOP fosters innovation and productivity in the coding community.

As the demand for more sophisticated software grows, so does the need for skilled object-oriented programmers. The versatility of C++, Java, and Python makes them valuable assets in various domains, from mobile app development to machine learning. By mastering these languages, developers can unleash their creativity and tackle complex challenges with confidence.

However, the journey of coding doesn’t end here. The future holds exciting prospects, with emerging technologies like artificial intelligence, virtual reality, and blockchain pushing the boundaries of what is possible. As coding continues to evolve, developers must adapt and embrace new paradigms and languages to stay ahead of the curve.

So, whether you’re just starting your coding journey or have been in the field for years, remember that the emergence of object-oriented programming marked a turning point in the history of coding. It opened doors to endless possibilities and set the stage for the dynamic and ever-evolving nature of this fascinating discipline. Embrace OOP, explore new languages, and keep pushing the boundaries of what you can achieve through code!

The Internet Age: Web Development and Dynamic Languages

In this era of rapid technological advancements, the Internet has become an integral part of our lives. It has revolutionized the way we communicate, access information, and conduct business. Behind the scenes, web development plays a crucial role in shaping the online experiences we encounter every day.

Web development encompasses a wide range of skills and technologies, from designing visually appealing interfaces to implementing complex functionality. At the heart of this process lies coding, enabling developers to bring their creative visions to life in the digital realm.

One of the most significant trends in web development has been the rise of dynamic languages. These languages do much of their work, such as type checking, while the program runs. They are usually executed by an interpreter or a just-in-time compiler rather than compiled ahead of time. Statically typed languages, by contrast, check types before the program runs. This flexibility has opened up a world of possibilities for web developers, enabling them to create interactive and responsive websites.
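Python is itself a dynamic language, so it can show the difference directly. In this sketch (the `describe` function is illustrative), types belong to values rather than to variables. A type mismatch surfaces only when the offending line actually runs. A statically typed language would typically reject the equivalent call before the program starts.

```python
def describe(value):
    """Works for any object that supports len(); no parameter type is declared."""
    return f"{type(value).__name__} of length {len(value)}"


item = "hello"
print(describe(item))         # str of length 5
item = [1, 2, 3]              # the same name now refers to a list
print(describe(item))         # list of length 3

try:
    describe(42)              # ints have no len(); detected only when this line runs
except TypeError as err:
    print("runtime error:", err)
```

This “duck typing” is what makes dynamic languages quick to prototype with. The cost is that some mistakes surface only at run time, which is why tests matter so much in these languages.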

One such dynamic language that has gained immense popularity is JavaScript. Originally developed for adding interactivity to web pages, JavaScript has evolved into a versatile language capable of powering entire web applications. Its ability to interact with HTML and CSS, the building blocks of web pages, makes JavaScript an indispensable tool for creating dynamic web experiences.

In addition to JavaScript, other dynamic languages like PHP, Ruby, and Python have also made significant contributions to web development. PHP, for instance, has long been the go-to language for server-side scripting, allowing developers to process data, generate dynamic content, and interact with databases. Ruby, on the other hand, gained fame through the Ruby on Rails framework, which accelerated web development by providing a streamlined structure and a wealth of pre-built functionalities.
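Server-side scripting of the kind PHP is known for follows a simple pattern: take a request parameter, query a database, and generate HTML. The sketch below shows that pattern in Python rather than PHP, using the standard-library `sqlite3` and `html` modules. The `products` table, its sample rows, and the `render_product_page` function are hypothetical. A real site would run this code behind a web server or framework.

```python
import sqlite3
from html import escape


def render_product_page(conn: sqlite3.Connection, max_price: float) -> str:
    """Query the database and build an HTML fragment for the response."""
    rows = conn.execute(
        "SELECT name, price FROM products WHERE price <= ? ORDER BY price",
        (max_price,),                  # parameterized query guards against SQL injection
    ).fetchall()
    items = "\n".join(
        f"  <li>{escape(name)}: ${price:.2f}</li>" for name, price in rows
    )                                  # escape() stops stored text from injecting HTML
    return f"<ul>\n{items}\n</ul>"


# Hypothetical sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
conn.executemany("INSERT INTO products VALUES (?, ?)",
                 [("Keyboard", 49.0), ("Mouse", 19.5), ("Monitor", 199.0)])
print(render_product_page(conn, max_price=50))
# lists Mouse, then Keyboard; Monitor is filtered out by the price limit
```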

The Internet age has also witnessed the proliferation of powerful web development frameworks and libraries. These tools simplify the development process by providing ready-made components and standardized patterns. They enable developers to build robust and scalable web applications with relative ease. Frameworks like React, Angular, and Vue.js have become go-to choices for creating interactive user interfaces, while libraries such as jQuery have simplified common tasks like DOM manipulation and AJAX.

Furthermore, web APIs (Application Programming Interfaces) have transformed the way web applications interact with external services. APIs let developers use functionality provided by other platforms, making it straightforward to integrate services like mapping, payment gateways, and social media sharing into web applications. As a result, the web has become a vast ecosystem of interconnected services, enhancing the user experience and enabling innovative applications.
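Calling a web API usually means building an HTTP request with the right URL, parameters, and credentials, then parsing the JSON that comes back. The sketch below uses only Python’s standard library. The endpoint `https://api.example.com/v1/geocode`, its `address` parameter, the bearer-token header, and the response fields `lat` and `lng` are all hypothetical stand-ins for whatever a real mapping service documents. The code builds the request without sending it and parses a made-up sample response.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint: a real service documents its own URL, parameters and auth.
BASE_URL = "https://api.example.com/v1/geocode"


def build_geocode_request(address: str, api_key: str) -> Request:
    """Build (but do not send) a GET request for the hypothetical geocoding API."""
    query = urlencode({"address": address})           # spaces become '+', etc.
    return Request(
        f"{BASE_URL}?{query}",
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
    )


def parse_geocode_response(body: bytes) -> tuple[float, float]:
    """Extract coordinates from the (hypothetical) JSON response format."""
    data = json.loads(body)
    return data["lat"], data["lng"]


request = build_geocode_request("1 Main Street", api_key="demo-key")
print(request.full_url)   # https://api.example.com/v1/geocode?address=1+Main+Street
# Sending it would be: urllib.request.urlopen(request).read()
sample_body = b'{"lat": 12.5, "lng": -3.25}'         # made-up sample response
print(parse_geocode_response(sample_body))           # (12.5, -3.25)
```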

As web development continues to evolve, staying abreast of the latest trends and technologies becomes essential for aspiring developers. The rise of mobile devices, for example, has led to the emergence of responsive web design, ensuring optimal user experience across various screen sizes. Similarly, the emphasis on website performance and security has given rise to techniques such as caching, content delivery networks, and secure authentication protocols.

The Internet age has undoubtedly revolutionized the field of web development, pushing the boundaries of what is possible and continually raising the bar for user experiences. Aspiring web developers should embrace the dynamic nature of this era and seize the opportunities it presents. By staying curious, adaptable, and continuously learning, they can contribute to shaping the future of web development and create remarkable online experiences for users around the globe.

Machine Learning and Artificial Intelligence: Coding for Intelligent Systems

As we delve deeper into the realm of coding, we reach a fascinating intersection where machine learning and artificial intelligence (AI) play a significant role. These cutting-edge technologies have revolutionized the way we approach coding, enabling us to create intelligent systems that can learn, adapt, and make decisions on their own. In this section, we will explore the intricacies of coding for machine learning and AI, and how it has opened up new frontiers in various industries.

Machine learning, a subset of AI, focuses on developing algorithms and statistical models that enable computers to learn and improve from experience without being explicitly programmed. This process involves feeding large amounts of data into models, which then analyze patterns, make predictions, and learn from the results. It is through coding that we provide the foundation for these models, allowing them to process and interpret data effectively.
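“Learning from experience” can be shown at its smallest scale: instead of being told the rule, a program estimates it from examples. This pure-Python sketch fits a straight line y ≈ w·x + b to a few made-up data points by gradient descent on the mean squared error. The learning rate and number of epochs are arbitrary choices for this toy data. Libraries such as scikit-learn provide tested implementations of the same kind of model.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0                        # start knowing nothing about the rule
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w                   # step downhill on the error surface
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]             # made-up data that happens to follow y = 2x + 1
w, b = fit_line(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")     # learned w=2.00, b=1.00
print(f"prediction for x=5: {w * 5 + b:.2f}")   # prediction for x=5: 11.00
```

The program is never told “multiply by 2 and add 1”. It recovers those parameters from the data and then uses them to predict an input it has not seen.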

One popular coding language for machine learning is Python. Its simplicity, extensive libraries, and vast community support have made it a go-to language for many data scientists and AI researchers. With Python, developers can access powerful tools like TensorFlow, scikit-learn, and PyTorch, which provide pre-built algorithms and building blocks for machine learning tasks. Additionally, Python’s readability and versatility make it an ideal language for rapid prototyping and experimentation in the AI domain.

When it comes to AI, coding takes on an even more critical role. AI systems aim to simulate human intelligence, enabling machines to understand, reason, and act in a way that mimics human behavior. Natural Language Processing (NLP) is a prominent application of AI that focuses on teaching computers to understand and generate human language. Through coding, developers can create sophisticated algorithms that process textual data, extract meaning, and generate responses, enabling chatbots and virtual assistants to interact intelligently with users.
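Real NLP systems rely on statistical or neural models. The basic pipeline, though, can be sketched with simple keyword matching: split text into tokens, extract a signal, and choose a response. The intents, keywords, and replies below are hypothetical. This toy has none of the language understanding of a modern chatbot.

```python
import re
from collections import Counter

# Hypothetical intents: each has a set of keywords and a canned reply.
INTENTS = {
    "hours": ({"open", "hours", "close", "closing"},
              "We are open 9am to 5pm, Monday to Friday."),
    "shipping": ({"ship", "shipping", "delivery", "deliver"},
                 "Standard delivery takes 3 to 5 working days."),
}
FALLBACK = "Sorry, I did not understand. Could you rephrase?"


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def reply(message: str) -> str:
    counts = Counter(tokenize(message))
    # Score each intent by how often its keywords occur in the message.
    scores = {name: sum(counts[word] for word in keywords)
              for name, (keywords, _) in INTENTS.items()}
    best = max(scores, key=scores.get)
    return INTENTS[best][1] if scores[best] > 0 else FALLBACK


print(reply("What time do you close on Friday?"))   # the "hours" reply
print(reply("How long does delivery take?"))        # the "shipping" reply
print(reply("Tell me a joke"))                      # the fallback
```

Production systems replace the keyword sets with learned models, but the same tokenize, score, and respond structure is still recognizable.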

Moreover, coding for AI involves working with neural networks, a fundamental concept in machine learning. Neural networks are loosely inspired by the structure of the human brain: they consist of layers of interconnected artificial neurons that process and transmit information. Developers code the architecture of these networks and then train their parameters on data, teaching them to recognize patterns, classify data, and even generate novel content like images and music.
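The smallest possible neural network is a single artificial neuron. This Python sketch implements a perceptron, which computes a weighted sum of its inputs plus a bias and fires if the result is positive. It trains the perceptron on the logical AND function with the classic perceptron learning rule. Modern networks stack many such units in layers, use smooth activation functions, and train with backpropagation, none of which is shown here. The learning rate and epoch count are illustrative.

```python
def step(z: float) -> int:
    return 1 if z > 0 else 0              # fire only if the weighted input is positive


def train_perceptron(samples, epochs: int = 20, lr: float = 0.1):
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            output = step(weights[0] * x1 + weights[1] * x2 + bias)
            error = target - output       # -1, 0 or +1
            # Perceptron learning rule: nudge the weights toward the correct answer.
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
for (x1, x2), target in AND_SAMPLES:
    prediction = step(weights[0] * x1 + weights[1] * x2 + bias)
    print((x1, x2), "->", prediction, "expected", target)
# each prediction matches its expected value once training has converged
```

Nothing in the code states the AND rule explicitly. The behavior lives entirely in the learned weights and bias, which is what “training” a network means at any scale.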

The impact of coding for machine learning and AI extends far beyond academia and research laboratories. These technologies have found applications in various industries, including healthcare, finance, transportation, and entertainment. For instance, in healthcare, AI algorithms can analyze medical images, detect diseases, and aid in diagnosis. In finance, machine learning models can predict market trends, optimize investment portfolios, and detect fraudulent activities. In transportation, AI-powered systems are enabling autonomous vehicles to navigate roads safely and efficiently.

As the demand for intelligent systems continues to grow, coding for machine learning and AI presents exciting opportunities for aspiring developers. By gaining proficiency in languages like Python, understanding statistical modeling, and familiarizing themselves with AI frameworks, developers can position themselves at the forefront of this transformative field. Whether it’s creating chatbots, implementing computer vision algorithms, or building recommendation systems, the possibilities are immense.

Coding for machine learning and AI offers a glimpse into the future of technology. It empowers us to create intelligent systems that can learn, reason, and adapt like never before. As we continue to explore the potential of these technologies, it is crucial to embrace the evolving nature of coding and stay updated with the latest advancements. By mastering the art of coding for machine learning and AI, we can pave the way for groundbreaking innovations that will shape the world in ways we can only imagine.

The Future of Coding and its Ever-Evolving Nature

As we conclude this journey through the history of coding, it is evident that this field is constantly evolving. The future of coding holds immense potential and promises exciting developments that will shape our world in ways we can only imagine.

One of the key aspects that will drive the future of coding is the rapid advancement of technology. As new technologies emerge, the demand for skilled coders will continue to rise. Whether it’s the Internet of Things (IoT), augmented reality (AR), or virtual reality (VR), coding will play a crucial role in bringing these innovations to life.

Furthermore, the rise of artificial intelligence (AI) and machine learning (ML) will revolutionize the coding landscape. As AI becomes more sophisticated, there will be a need for coders to create intelligent systems that can adapt and learn from data. This opens up exciting opportunities for those interested in the field of AI and ML.

Another significant trend that will shape the future of coding is the increasing emphasis on cybersecurity. With the growing number of cyber threats, there will be a greater demand for coders who can develop secure software and systems. As technology becomes more integrated into our lives, safeguarding sensitive information will be of paramount importance.

Moreover, the future of coding will see a shift towards more collaborative and adaptable approaches. With the rise of remote work and distributed teams, coders will need to adapt to new ways of collaborating and communicating with their peers. This will require strong interpersonal skills and the ability to work effectively in a virtual environment.

Additionally, coding will become more accessible to a wider audience. Efforts are being made to introduce coding at a younger age and make it more inclusive, breaking down barriers and encouraging diversity in the field. This will lead to a more diverse and innovative coding community in the future.

In conclusion, coding is a field that is constantly evolving, adapting, and expanding. The future holds endless possibilities, with technological advancements, AI, cybersecurity, collaboration, and accessibility being key drivers. Aspiring coders should embrace this ever-changing nature and continuously update their skills to stay ahead in this dynamic industry. The future of coding is bright, and it’s up to us to shape it.